Authors: Abeba Bade, Hussein Mansour, Tobi Oladunjoye

Affiliation: Texas A&M University

Abstract

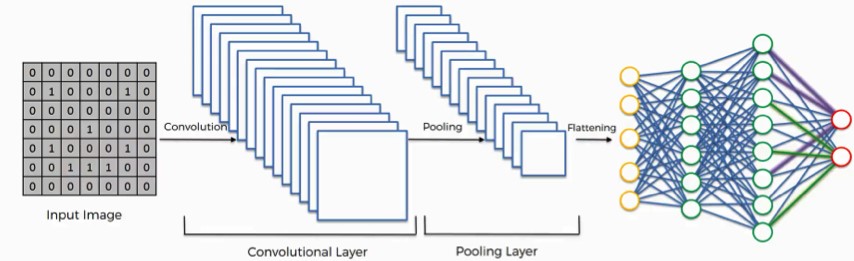

This report details the development and implementation of Convolutional Neural Networks (CNNs) and non-neural network models (NNNs) for classifying clothing articles in the well-known FashionMNIST dataset, with the aim of determining the best-performing approach for this dataset. The experiment compared seven machine learning models for classifying the images into distinct clothing categories: Multilayer Perceptron (MLP), Support Vector Machine (SVM), Random Forest (RF), K-Nearest Neighbors (KNN), Gradient Boosting Machine (GBM), and two CNNs. The two CNN models evaluated were CNN-1, with 4 convolutional layers and 4 fully connected layers (4xConv, 4xFC), and CNN-2, with 5 convolutional layers and 5 fully connected layers (5xConv, 5xFC).

Introduction

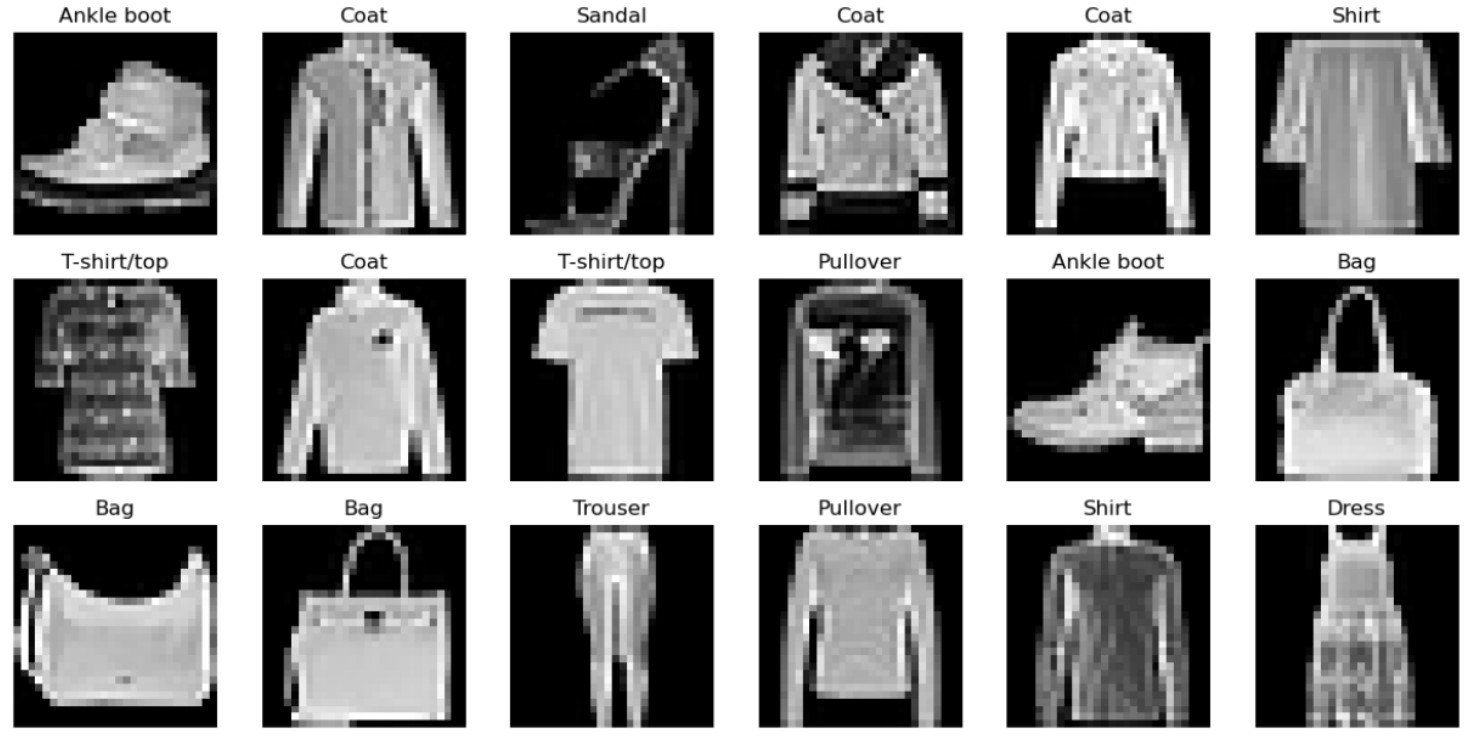

This report details the use of CNN and NNN algorithms to classify items of clothing from the FashionMNIST dataset. The dataset consists of 70,000 grayscale images (each 28x28 pixels) distributed equally among 10 classes: T-shirt/Top, Trouser, Pullover, Dress, Coat, Sandal, Shirt, Sneaker, Bag, and Ankle Boot. FashionMNIST is widely used as a standard benchmark for testing the efficacy of image classification algorithms, especially CNNs. However, because several clothing categories closely resemble one another, achieving optimal classification on this dataset is challenging.

Clothing item classification is a fundamental task in computer vision, and many research papers suggest that CNN-based architectures are inherently suitable for image classification. Focusing on a single model, however, makes it harder to understand the details of the dataset and how different methods classify clothes. Exploring several models also opens the possibility of combining them to improve overall performance. This approach makes the classification system more robust and reveals more about the patterns in the FashionMNIST dataset. Thus, in addition to the two CNN models, five NNN algorithms were evaluated on the dataset for comparison: MLP, SVM, RF, KNN, and GBM.

Data Preparation, Transformation, and EDA

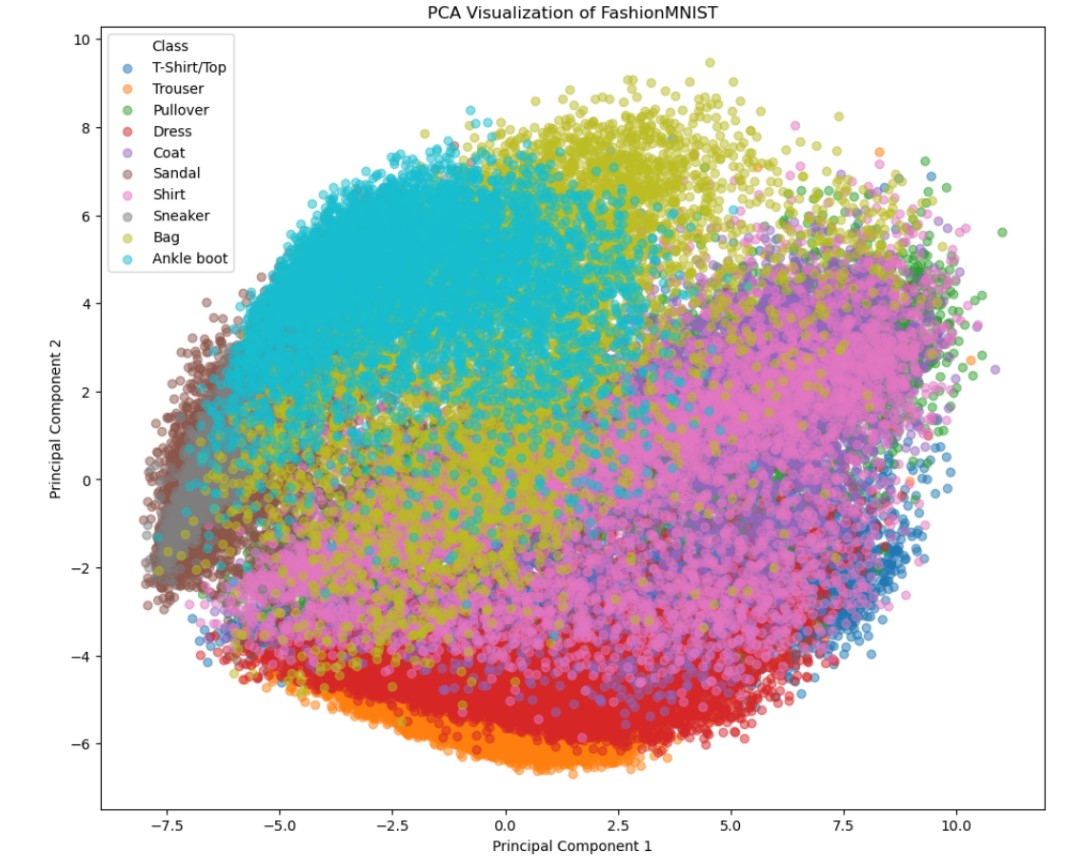

The FashionMNIST dataset underwent several preparation and transformation steps to make it suitable for the classification models. This process involved normalization, scaling, and augmentation techniques to enhance the dataset's diversity and representativeness. Additionally, exploratory data analysis (EDA), including Principal Component Analysis (PCA), was conducted to gain insight into the dataset's characteristics.
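As a rough illustration of the scaling and PCA steps, the sketch below rescales pixel values to [0, 1] and projects flattened images onto their first two principal components with NumPy. It runs on random stand-in data rather than the real dataset, and the sample count, the variable names, and the choice of two components are assumptions made for this example, not details taken from the report.

```python
import numpy as np

# Stand-in data (assumption): 200 random "images" flattened to 784 pixels,
# with integer intensities in 0..255 like the raw FashionMNIST pixels.
rng = np.random.default_rng(0)
pixels = rng.integers(0, 256, size=(200, 28 * 28)).astype(np.float64)

# Scale intensities to the [0, 1] range.
scaled = pixels / 255.0

# PCA requires mean-centered features.
centered = scaled - scaled.mean(axis=0)

# The rows of `components` are the principal directions, ordered by variance.
_, singular_values, components = np.linalg.svd(centered, full_matrices=False)

# Fraction of total variance captured by each component.
explained_ratio = singular_values**2 / np.sum(singular_values**2)

# Project every image onto the first two components (e.g. for a scatter plot).
projected = centered @ components[:2].T
print(projected.shape, explained_ratio[:2])
```

On the real data, a scatter plot of `projected` colored by class label is one common way to see which clothing categories overlap.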

Methods/Experiments

The methodology includes model training, hyperparameter tuning, and performance evaluation. Models were assessed using accuracy, precision, recall, and F1-score. Two CNN architectures, CNN-1 (4 convolutional layers, 4 fully connected layers) and CNN-2 (5 convolutional layers, 5 fully connected layers), were the focus due to their advanced feature extraction capabilities. The training process was conducted using the PyTorch library, with emphasis on data transformation, dataset splitting, and efficient data loading techniques.
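To make the CNN-1 description concrete, the following is a minimal PyTorch sketch of a network with 4 convolutional and 4 fully connected layers, followed by one training step on random data. Only the layer counts come from the report. The class name `SketchCNN`, the channel widths, kernel sizes, pooling placement, optimizer, and learning rate are all assumptions chosen for illustration and may differ from the models actually trained.

```python
import torch
from torch import nn


class SketchCNN(nn.Module):
    """Hypothetical 4-conv / 4-FC network; all layer sizes are assumptions."""

    def __init__(self, num_classes: int = 10) -> None:
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(1, 32, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv2d(32, 32, kernel_size=3, padding=1), nn.ReLU(),
            nn.MaxPool2d(2),  # 28x28 -> 14x14
            nn.Conv2d(32, 64, kernel_size=3, padding=1), nn.ReLU(),
            nn.Conv2d(64, 64, kernel_size=3, padding=1), nn.ReLU(),
            nn.MaxPool2d(2),  # 14x14 -> 7x7
        )
        self.classifier = nn.Sequential(
            nn.Flatten(),
            nn.Linear(64 * 7 * 7, 256), nn.ReLU(),
            nn.Linear(256, 128), nn.ReLU(),
            nn.Linear(128, 64), nn.ReLU(),
            nn.Linear(64, num_classes),  # one logit per clothing class
        )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.classifier(self.features(x))


torch.manual_seed(0)
model = SketchCNN()

# Random stand-in batch (assumption): 8 grayscale 28x28 images with labels 0..9.
images = torch.rand(8, 1, 28, 28)
labels = torch.randint(0, 10, (8,))

optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)
loss_fn = nn.CrossEntropyLoss()

# One training step: forward pass, loss, backward pass, parameter update.
logits = model(images)
loss = loss_fn(logits, labels)
optimizer.zero_grad()
loss.backward()
optimizer.step()
print(logits.shape, loss.item())
```

A CNN-2 style variant would add a fifth convolutional layer and a fifth fully connected layer in the same pattern.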

Results

| Model | Accuracy | F1-score | Precision | Recall |
|---|---|---|---|---|
| MLP | 0.864 | 0.864 | 0.864 | 0.864 |
| SVM | 0.88 | 0.879 | 0.880 | 0.879 |
| KNN | 0.846 | 0.847 | 0.849 | 0.846 |
| RF | 0.874 | 0.873 | 0.873 | 0.874 |
| GBM | 0.893 | 0.892 | 0.892 | 0.893 |
| CNN-1 | 0.909 | 0.910 | 0.912 | 0.909 |
| CNN-2 | 0.915 | 0.916 | 0.918 | 0.915 |
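The four metrics reported above can be computed from true and predicted labels as in the sketch below. The report does not state how the per-class scores were averaged, so macro-averaging (an unweighted mean over classes) is an assumption here. The helper name `macro_metrics` and the toy labels are likewise hypothetical.

```python
from collections import Counter


def macro_metrics(y_true, y_pred):
    """Accuracy plus macro-averaged precision, recall, and F1 (assumed averaging)."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class gains a false positive
            fn[truth] += 1  # true class gains a false negative

    precisions, recalls, f1s = [], [], []
    for label in labels:
        predicted = tp[label] + fp[label]
        actual = tp[label] + fn[label]
        precision = tp[label] / predicted if predicted else 0.0
        recall = tp[label] / actual if actual else 0.0
        total = precision + recall
        f1 = 2 * precision * recall / total if total else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)

    n = len(labels)
    return {
        "accuracy": sum(tp.values()) / len(y_true),
        "precision": sum(precisions) / n,
        "recall": sum(recalls) / n,
        "f1": sum(f1s) / n,
    }


# Toy example with three balanced classes (not FashionMNIST data).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 1, 1, 1, 2, 0]
scores = macro_metrics(y_true, y_pred)
print(scores)
```

When every class has the same number of samples, as in this toy example, macro-averaged recall equals accuracy.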

Conclusion

The study concluded that CNNs outperform NNNs in classifying the FashionMNIST dataset: both CNN models achieved higher accuracy (0.909 and 0.915) than the best-performing NNN, GBM (0.893). CNN-2 showed a marginal improvement over CNN-1, suggesting that a more complex architecture may lead to better feature extraction, although the added complexity also increases the risk of overfitting.